ETL Interview Breakdown: How Data Engineers Are Tested

Why "Build a pipeline" isn’t really what they’re asking

Let’s Get Real About ETL Interviews

You walk into a Data Engineering interview. They ask:

“Can you walk me through an ETL pipeline you’ve built?”

Seems basic, right?

But here’s the catch: they’re not looking for just a tool dump.

They’re trying to reverse-engineer your thinking.

You’ve now built ETL pipelines, cleaned real data, and understood how batch vs streaming works.

Today’s goal is simple. In Zero2DataEngineer breakdown, we’ll decode how ETL interview questions are framed, what they’re secretly testing, and how to structure your answers like a pro — even if you’ve never worked at FAANG.

Whether you’re applying for a Data Engineer, Analytics Engineer, or even Backend-heavy role — ETL questions will show up.

Here’s how to answer them like someone who’s done it before.

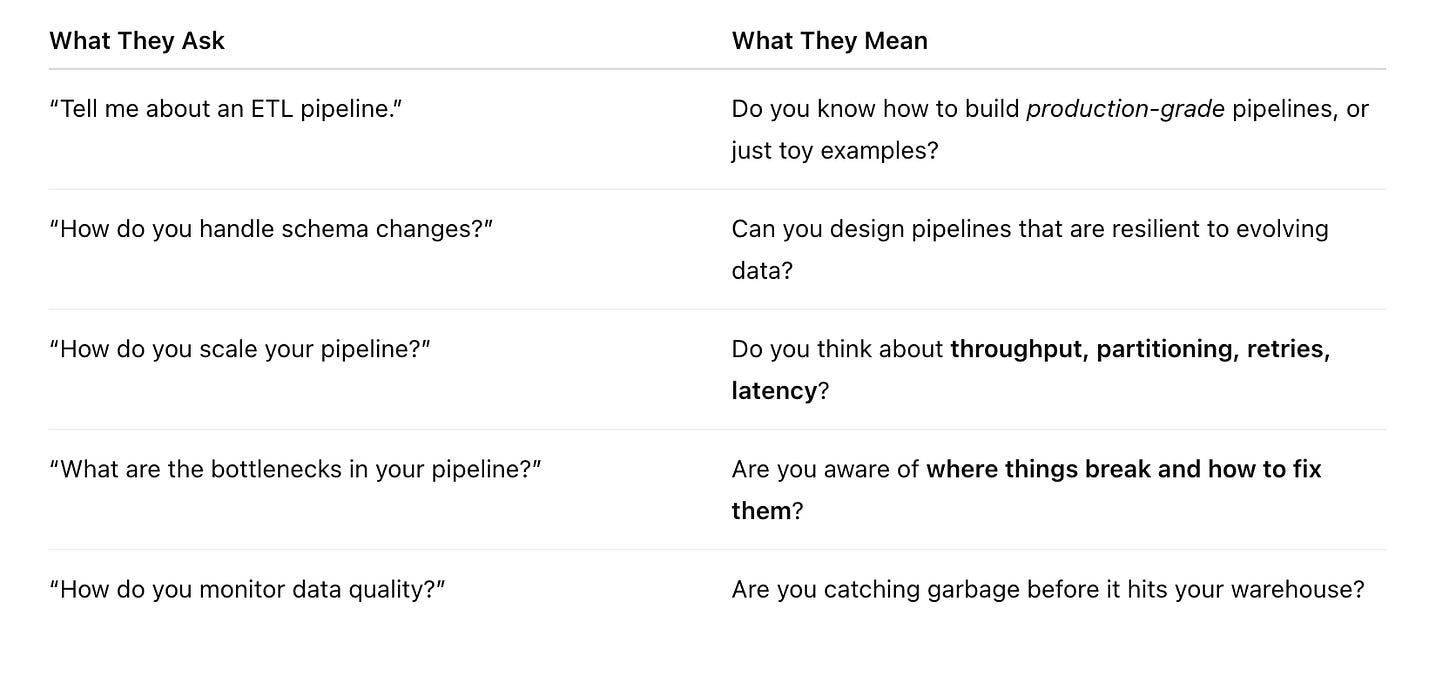

How ETL Interview Questions Are Really Framed

They won’t ask:

“What is ETL?”

They’ll ask:

How would you design a pipeline to load millions of rows from an external source?

What happens if your load step fails halfway through?

How do you make your pipeline idempotent?

When do you use batch vs real-time ingestion?

The trick is to answer like a system thinker, not just a coder.

Interview Answer Formula (Reframe Your Thinking)

Use this ETL STAR + Stack Formula when answering:

Situation: What was the use case?

Tool stack: Which tools did you choose and why?

Architecture: Show the pipeline stages.

Resilience: How did you handle failures, alerts, schema drift?

+ Stack Justification: Why this combo (Airflow + S3 + Spark etc.)?

Pro tip: If you haven’t built one end-to-end yet, use this:

“Here’s how I would design it for a [use case].”

Then walk them through your design — intelligently, not hypothetically.

Master These Real ETL Questions Before Your Next Interview

Q1. What are some common challenges in ETL pipelines?

1. Handling bad records

2. Schema changes

3. Late-arriving data

4. Dependencies & retries

Sample Answer:

“One of the biggest challenges I’ve faced is handling schema drift — especially when upstream sources silently change a column name or data type. I’ve built schema validation into the extraction step using Great Expectations and version control through Glue Catalog.

I’ve also handled bad records by logging and quarantining them into a separate S3 bucket with alerting. For late-arriving data, I design pipelines to be idempotent — using UPSERT logic or late data windows. And for dependencies, I always make sure DAGs have proper

depends_on_past, retry logic, and failure alerts configured.”